AZ-900: Cloud Concepts - Scalability and Elasticity

In “AZ-900 Series Part 1: What is Cloud Computing?” we built a base understanding of how we got here. Now let’s talk about two of the key benefits that cloud computing provides: scalability and elasticity.

The demand for infrastructure resources (compute, storage, and network) is often not static. Users may visit websites more often at certain times of the day. When high-traffic events such as the Super Bowl or a World Cup happen, the demand placed on services delivering that content increases, and so does consumption of the underlying CPU, memory, disk, and network.

However, even when you aren’t using the underlying resources, you are often still paying for them. A good example is a virtual machine (VM): you pay monthly for a specific VM size to be running (e.g., 2 vCPUs and 4 GB of memory), and you keep paying that monthly charge whether or not those CPUs are running at 100%. Consider enterprise applications that run reports at a certain time of the week or month. Naturally, those runs require more resources, but do you really want to pay for larger machines, or more machines, to be running all the time? This is a major area where cloud computing can help, but we need to take the workload into account. Scalability and elasticity are the ways in which we can deal with the scenarios described above.
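To make the reporting trade-off concrete, here is a back-of-the-envelope sketch. The hourly rates, the 730-hour month, and the 8-hour monthly reporting window are all hypothetical numbers chosen for illustration, not Azure prices; the point is only the shape of the comparison between paying for a large VM all month and running a small VM that is resized only for the reports.

```python
# Hypothetical hourly rates for illustration only; real Azure prices vary by
# region, VM series, and purchasing option.
SMALL_VM_RATE = 0.10   # assumed cost per hour of the everyday VM size
LARGE_VM_RATE = 0.40   # assumed cost per hour of the size needed for reports
HOURS_PER_MONTH = 730  # common approximation of the hours in a month
REPORT_HOURS = 8       # assumed hours per month spent running reports


def always_large() -> float:
    """Provision for the peak: run the large size all month."""
    return LARGE_VM_RATE * HOURS_PER_MONTH


def large_only_for_reports() -> float:
    """Run the small size normally and the large size only during reports."""
    normal_hours = HOURS_PER_MONTH - REPORT_HOURS
    return SMALL_VM_RATE * normal_hours + LARGE_VM_RATE * REPORT_HOURS


if __name__ == "__main__":
    print(f"Always large:           ${always_large():.2f}")
    print(f"Large only for reports: ${large_only_for_reports():.2f}")
```

With these assumed numbers the script prints $292.00 for the always-large option and $75.40 for the resize-for-reports option. Real savings depend on actual prices and on whether the application can tolerate the restart that a resize usually involves.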

Scalability is our ability to change the amount of resources a workload uses. This can mean vertical scaling (scaling up or down) or horizontal scaling (scaling out or back in).

Scaling up, or vertical scaling, is the concept of adding more resources to an instance that already has resources allocated, such as adding CPU or memory to a VM. For example, if a surge of users hits a website, we could add more CPU for that day and then scale the CPUs back down the following day. How dynamically this can happen depends on how easily we can add and remove CPUs while the machine is running, or on the application team’s ability to tolerate an outage. This is because vertical scaling typically requires redeploying or powering down the instance to make the change, depending on the underlying operating system. Either way, the benefit of doing this in Azure is that we don’t have to purchase, rack, and configure the hardware up front. Instead, we can adjust the size by clicking in the Azure portal or by using code. Microsoft has already provisioned resources we can allocate, and we begin paying for those resources as we use them.
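Choosing a new size when scaling up or down can be sketched as picking the smallest size that meets the required CPU and memory. The size names and specs in `SIZE_LADDER` are hypothetical placeholders rather than real Azure VM sizes, and `pick_size` and `resize` are illustrative helpers, not part of any Azure SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gb: int


# Hypothetical size ladder, ordered smallest to largest; real Azure VM sizes
# and their availability differ by series and region.
SIZE_LADDER = [
    VmSize("small", 2, 4),
    VmSize("medium", 4, 8),
    VmSize("large", 8, 16),
    VmSize("xlarge", 16, 32),
]


def pick_size(required_vcpus: int, required_memory_gb: int) -> Optional[VmSize]:
    """Return the smallest size that satisfies both requirements.

    Vertical scaling has a ceiling: if no size is big enough, return None,
    signalling that scaling up alone cannot absorb the load.
    """
    for size in SIZE_LADDER:
        if size.vcpus >= required_vcpus and size.memory_gb >= required_memory_gb:
            return size
    return None


def resize(current: VmSize, target: VmSize) -> str:
    """Describe the change; a resize typically means a restart or redeploy."""
    if target == current:
        return f"stay on {current.name}"
    direction = "up" if target.vcpus > current.vcpus else "down"
    return f"scale {direction}: {current.name} -> {target.name} (expect a restart)"


if __name__ == "__main__":
    busy_day = pick_size(6, 12)
    print(resize(SIZE_LADDER[0], busy_day))
    print(resize(busy_day, pick_size(2, 4)))
```

Note the `None` case: once the largest size is reached, scaling up is no longer an option, which is one reason horizontal scaling becomes attractive.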

Horizontal scaling works a little differently and, generally speaking, provides a more reliable way to add resources to our application. Scaling out means adding instances that can share the workload. These could be VMs, or perhaps additional container pods. The idea is that users accessing the website come in through a load balancer, which chooses the web server each request goes to. When demand increases, we can deploy more web servers (scaling out). When demand subsides, we can reduce the number of web servers (scaling in). The benefit here is that we don’t need to change the virtual hardware of any existing machine; instead, we add instances to, and remove them from, the pool behind the load balancer.
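The load-balancer idea can be modeled with a toy round-robin balancer, where scaling out and in is simply adding or removing entries from its server list. Round-robin is an assumption made for readability; Azure Load Balancer, for example, distributes traffic using a hash of connection properties, so treat the hypothetical `RoundRobinBalancer` class as a conceptual model only.

```python
from typing import List


class RoundRobinBalancer:
    """Toy load balancer: hands each request to the next server in turn."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = list(servers)
        self._next = 0

    def scale_out(self, server: str) -> None:
        """Add capacity by registering another identical server."""
        self.servers.append(server)

    def scale_in(self, server: str) -> None:
        """Remove capacity by deregistering a server."""
        self.servers.remove(server)
        # Keep the rotation pointer inside the (now shorter) list.
        self._next = self._next % len(self.servers) if self.servers else 0

    def route(self) -> str:
        """Pick the server that receives the next request."""
        if not self.servers:
            raise RuntimeError("no servers registered")
        server = self.servers[self._next]
        self._next = (self._next + 1) % len(self.servers)
        return server


if __name__ == "__main__":
    lb = RoundRobinBalancer(["web-1", "web-2"])
    print([lb.route() for _ in range(4)])
    lb.scale_out("web-3")  # demand increases
    print([lb.route() for _ in range(3)])
    lb.scale_in("web-3")   # demand subsides
    print([lb.route() for _ in range(2)])
```

No server’s size changes at any point; capacity comes purely from how many servers sit behind the balancer.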

In summary:

Scaling up (vertical scaling) = add resources to existing instances.

Scaling out (horizontal scaling) = add more instances.

If scalability is our ability to scale up or out, what is elasticity?

Elasticity follows on from scalability and describes a characteristic of the workload: its ability to grow and shrink its resources as demand changes. You will often hear people ask, “Is this workload elastic?” Elastic workloads are a major pattern that benefits from cloud computing. If our workload experiences seasonality and variable demand, it makes sense to build it in a way that can take advantage of cloud computing. As the workload’s resource demands increase, we can go a step further and add rules that automatically add instances. As demands decrease, similar rules can scale those instances back in when it is safe to do so without a performance impact on users.
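Such rules can be sketched as a simple threshold-based autoscaler, loosely in the spirit of Azure autoscale settings (minimum and maximum instance counts, scale-out and scale-in rules, a cooldown). The 70%/30% CPU thresholds, the instance limits, and counting the cooldown in evaluation steps rather than minutes are hypothetical choices for illustration, not Azure defaults.

```python
from dataclasses import dataclass


@dataclass
class AutoscaleRule:
    # All values are hypothetical examples, not Azure defaults.
    scale_out_cpu: float = 70.0  # add an instance above this average CPU %
    scale_in_cpu: float = 30.0   # remove an instance below this average CPU %
    min_instances: int = 2       # floor, so a quiet period never drops to zero
    max_instances: int = 10      # ceiling, to cap cost
    cooldown_steps: int = 3      # evaluations to wait after any change


class Autoscaler:
    def __init__(self, rule: AutoscaleRule, instances: int) -> None:
        self.rule = rule
        self.instances = instances
        self._cooldown = 0

    def evaluate(self, avg_cpu: float) -> int:
        """Apply the rule to one metric sample and return the instance count."""
        if self._cooldown > 0:
            # Let the last change take effect before judging the metric again.
            self._cooldown -= 1
            return self.instances
        r = self.rule
        if avg_cpu > r.scale_out_cpu and self.instances < r.max_instances:
            self.instances += 1
            self._cooldown = r.cooldown_steps
        elif avg_cpu < r.scale_in_cpu and self.instances > r.min_instances:
            self.instances -= 1
            self._cooldown = r.cooldown_steps
        return self.instances


if __name__ == "__main__":
    scaler = Autoscaler(AutoscaleRule(cooldown_steps=1), instances=2)
    samples = [85, 85, 85, 50, 20, 20, 20, 20, 20]  # hypothetical CPU readings
    print([scaler.evaluate(cpu) for cpu in samples])
```

The gap between the scale-out and scale-in thresholds, together with the cooldown, keeps the autoscaler from flapping between instance counts, and the minimum count is what makes scaling in “safe” in this sketch.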

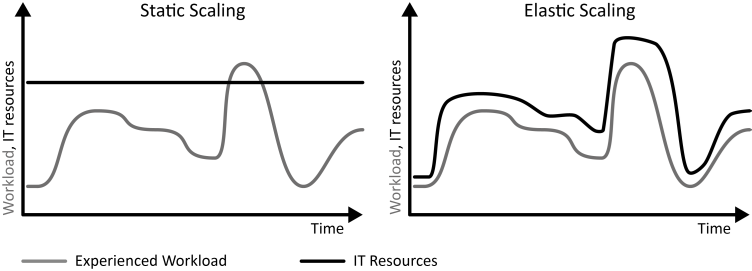

The big difference between static scaling and elastic scaling is that with static scaling, we provision resources for the “peak” even though the underlying workload is constantly changing. With elastic scaling, we tune the system so that resources are added and removed as demand changes, while keeping some buffer room.
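The difference can be quantified in instance-hours for a single day. The hourly demand curve, the per-instance capacity, and the 20% buffer are all hypothetical numbers; integer ceiling division is used so the instance counts are exact.

```python
from typing import List

# Hypothetical requests per second for each hour of one day:
# 8 quiet night hours, 8 daytime hours, a 2-hour evening peak, 6 late hours.
HOURLY_DEMAND: List[int] = [50] * 8 + [300] * 8 + [800] * 2 + [150] * 6
CAPACITY_PER_INSTANCE = 100  # assumed requests/second one instance can serve
BUFFER_PERCENT = 20          # assumed headroom kept above measured demand


def instances_needed(demand: int) -> int:
    """Instances required to serve `demand` plus the buffer (ceiling division)."""
    required = demand * (100 + BUFFER_PERCENT)
    per_instance = CAPACITY_PER_INSTANCE * 100
    return max(1, -(-required // per_instance))


def static_instance_hours(demand: List[int]) -> int:
    """Static scaling: size for the peak hour and run that count all day."""
    return instances_needed(max(demand)) * len(demand)


def elastic_instance_hours(demand: List[int]) -> int:
    """Elastic scaling: run only what each hour needs, plus the buffer."""
    return sum(instances_needed(d) for d in demand)


if __name__ == "__main__":
    print("static instance-hours: ", static_instance_hours(HOURLY_DEMAND))
    print("elastic instance-hours:", elastic_instance_hours(HOURLY_DEMAND))
```

With these assumed numbers, static scaling keeps 10 instances running for all 24 hours (240 instance-hours), while elastic scaling uses 72. In practice new instances take time to start, which is one reason to keep the buffer.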

Once again, cloud computing, which appears to the consumer to offer near-infinite scale, allows us to take advantage of these patterns and keep costs down. If we make good use of vertical and horizontal scaling techniques, we can create a system that automatically responds to user demand, allocating and deallocating resources as appropriate.

For additional best practices on Azure autoscaling, see https://docs.microsoft.com/en-us/azure/architecture/best-practices/auto-scaling

Enroll in the AZ-900 course today and start your path to becoming certified in Azure Fundamentals.